Direct Preference Optimisation

- January 21, 2024

- Posted by: William Dorrington

- Category: Beginner Data Science Frontiers Level Machine Learning Reinforcement Learning

LLMs and IFaaF

The advent of Large Language Models (LLMs) has been a game changer for Generative Artificial Intelligence (Gen AI). Most people will have experienced or seen Gen AI in one capacity or another by now – from ChatGPT creating weird and wonderful passages of text and images, through to Microsoft first-party applications such as Dynamics 365 infused with Intelligent Functionality as a Feature (IFaaF – you heard it here first 😉), and everything in between.

Reinforcement Learning from Human Feedback

What it is:

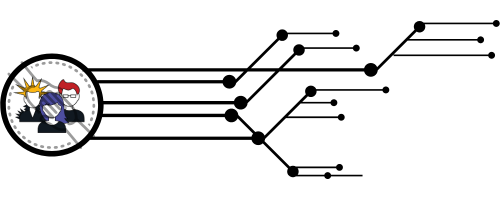

However, because LLMs “learn” largely through unsupervised pre-training, controlling them and making sure they generate appropriate output for a given input can be difficult. Until now, this monitoring and feedback loop has been carried out by humans, an approach referred to as “Reinforcement Learning from Human Feedback” (RLHF). Simplifying here, it involves a reward mechanism that gives the model feedback when it produces something humans prefer. The policy is then optimised against that reward, typically with Proximal Policy Optimisation (PPO), which fine-tunes the model further.

RLHF is a method of integrating human judgement into the training process of LLMs. It involves a group of assessors who review the inputs provided to the model and the corresponding outputs generated. These assessors then rank these outputs based on their appropriateness, relevance, and alignment (from the most epic to absolute fails) with human values and preferences. This ranking assigns a score to the generated output. It acts as a feedback mechanism, essentially a reward system, which informs the model about the quality and suitability of its responses.
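To make the ranking-to-score step a little more concrete, here is a minimal sketch of one common way it is done: a ranking is broken into (preferred, rejected) pairs, and a reward model is trained so that the preferred output scores higher. The function names and the example outputs are purely illustrative assumptions, and the loss shown is a Bradley-Terry style pairwise loss rather than the method of any specific product.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_outputs):
    """Turn one assessor's ranking (best first) into (preferred, rejected) pairs."""
    # combinations() keeps the input order, so the first item of each pair
    # is always the one the assessor ranked higher.
    return list(combinations(ranked_outputs, 2))


def pairwise_loss(score_preferred, score_rejected):
    """Bradley-Terry style loss: -log(sigmoid(score_preferred - score_rejected))."""
    margin = score_preferred - score_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical ranking from a single assessor, best first.
ranked = ["helpful answer", "vague answer", "rude answer"]
pairs = ranking_to_pairs(ranked)

for preferred, rejected in pairs:
    print(f"{preferred!r} is preferred over {rejected!r}")

# The loss shrinks as the reward model scores the preferred output higher.
print(pairwise_loss(2.0, 0.0) < pairwise_loss(0.0, 2.0))
```

The scores passed to `pairwise_loss` would come from a reward model in a real pipeline; here they are just numbers to show the direction of the signal.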

Policies:

It is important to note that a key concept in LLMs, and subsequently in RLHF, is the policy: the strategy the model follows when generating outputs. In RLHF, the policy is what gets adjusted, steering the model towards outputs that align with human values and preferences.

Employing RLHF:

By employing RLHF, the LLM is continuously fine-tuned, learning first from the epically large dataset it was initially trained on and then from ongoing, dynamic human input. This human-in-the-loop approach helps the model’s outputs align more closely with human expectations and ethical standards (inherently flawed, but let’s tackle ethics at a later date). The model was trained on the internet, and we all know that can be a dark place, with plenty of human thinking we would not want the model to regurgitate… It’s a way of natively embedding the model with a nuanced understanding of human values, cultural contexts, and ethical considerations, which are crucial for the model’s effectiveness and reliability in real-world applications such as those we see in Dynamics 365.

The model then goes through iterative refinement: as it receives more feedback, it updates its policy to increase the likelihood of choosing outputs that humans will rate positively, thus maximising the score it receives. A crucial part of this refinement is keeping a balance between exploration and exploitation, so the model can improve and adapt without over-correcting in response to each adjustment.
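One common way RLHF pipelines keep that balance is to subtract a small penalty from the reward whenever the updated policy drifts away from the original (reference) model. The sketch below assumes we already have the log-probability each model assigns to a response; the function name, the `beta` value and the numbers are illustrative assumptions, not taken from any particular implementation.

```python
def shaped_reward(reward, logp_policy, logp_reference, beta=0.1):
    """Reward-model score minus a penalty for drifting from the reference model."""
    # How much more likely the updated policy makes this response
    # compared with the model we started from.
    drift = logp_policy - logp_reference
    return reward - beta * drift


# Same reward-model score, but the second response comes from a policy
# that has moved further away from the reference model.
close_to_reference = shaped_reward(reward=1.0, logp_policy=-5.0, logp_reference=-5.0)
far_from_reference = shaped_reward(reward=1.0, logp_policy=-2.0, logp_reference=-5.0)

print(close_to_reference, far_from_reference)
```

A larger `beta` keeps the model closer to where it started; a smaller one lets it chase the reward more aggressively.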

The issue with RLHF

As great as Reinforcement Learning from Human Feedback is, it does have many flaws:

- Resource scalability: as it says on the tin, this approach requires humans, and finding enough of them with varied opinions, knowledge and skills can be challenging. Reading through multiple outputs, weighing them up and assigning rankings all takes time, creating even more resource lag.

- Cost: The cost of hiring the specialised resources above adds to the already phenomenal cost of creating LLMs in the first place.

- Human perception and bias: our thoughts, opinions and assessments are subjective. We all have different life experiences, education levels, and world views, which means when we appraise something, what may be good for one person may not be suitable for others. This can introduce biases to the model when taking on the Human Feedback, potentially leading to a skewed model.

- Appropriate reward mechanisms: as mentioned above, human assessment can be difficult due to varying perceptions – designing a reward mechanism that captures this, and that an LLM can then learn from, can be an incredibly tricky task. This only worsens for even more subjective qualities such as humour, politeness and cultural sensitivity. Then we need to consider consistency of appraisal across assessors, and lots more!

This is only to name a few – there are many more! As you can see, this leads to a costly, time-consuming and complex approach.

Enter stage left: Direct Preference Optimisation

Direct Preference Optimisation (DPO) is a new technique for moving a model towards human-preferred outputs by exploiting the relationship between the reward mechanism and the optimal policy, with the aim of driving that maximum-scoring outcome. As we know, RLHF leverages a reward mechanism and Proximal Policy Optimisation to push the model towards human-preferred outputs, aiming for reward maximisation. DPO represents a paradigm shift from RLHF by optimising model outputs directly on human preferences, without the intermediary step of training a separate reward model – magical!

DPO can be summarised in two key stages:

- Supervised Fine-Tuning: the model is fine-tuned on the focal dataset needed for its intended purpose.

- Preference Learning: the model is fed preference data based on examples from the fine-tuning stage. Preference data is a curated selection of example outcomes for defined prompts, typically pairing a preferred response with a less preferred one.

With access to both the task data and the preference data, the model can pick up the patterns and nuances of the knowledge and of the preferred outcomes. In effect, the model itself takes on the role of the reward mechanism: it compares its generated responses against the preferred ones and optimises the policy directly.
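For the curious, the preference learning stage can be sketched as a single loss function. This is a minimal, framework-free sketch of the DPO objective, assuming we already have the log-probability that the model being trained and a frozen reference copy assign to a preferred ("chosen") and a less preferred ("rejected") response. The function name, argument names, `beta` value and numbers are illustrative assumptions.

```python
import math


def dpo_loss(logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """DPO loss for one preference pair, computed from log-probabilities."""
    # How much the policy has raised each response relative to the reference model.
    chosen_gain = logp_chosen - ref_logp_chosen
    rejected_gain = logp_rejected - ref_logp_rejected
    margin = beta * (chosen_gain - rejected_gain)
    # -log(sigmoid(margin)), written in a numerically stable way.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Policy identical to the reference: no preference learned yet.
untrained = dpo_loss(-10.0, -12.0, -10.0, -12.0)

# Policy now favours the chosen response and disfavours the rejected one.
improved = dpo_loss(-8.0, -14.0, -10.0, -12.0)

print(untrained, improved)
```

Notice there is no reward model anywhere in the function: the comparison between the policy and the reference model plays that role, which is the whole point of DPO.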

RLHF example:

- Context: A company develops a customer service chatbot using a large language model. The initial version, trained on vast internet data, is proficient in language but occasionally provides responses that are either too formal or not aligned with the company’s tone. We could mention DPD’s latest mishap here but we won’t 👀.

- RLHF Application: The company employs a group of human reviewers who interact with the chatbot. They provide feedback on its responses, focusing on tone, relevance, and alignment with the company’s customer service standards. Maybe DPD did do this, and they were happy with the responses…. 😂

- Reward Mechanism: For each interaction, the reviewers score the chatbot’s responses on a scale (for example, 1 to 10) based on their appropriateness. These scores serve as rewards or penalties, depending on their value. The higher the score, the more strongly the model treats the response as a positive outcome.

- Outcome: Over time, the chatbot’s responses become more aligned with the desired tone and content as it learns from the human feedback. The model’s policy is fine-tuned to prefer responses that match the reviewers’ preferences, leading to a more effective and user-friendly customer service tool. The reward mechanism ensures that the chatbot learns effectively from human feedback, aligning its outputs with what human experts deem most appropriate.
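As a small illustration of how a 1 to 10 score can act as a reward or a penalty, the sketch below centres the scale so that low scores become negative and high scores become positive, then averages across reviewers. The function names, the centring scheme and the example scores are assumptions made for illustration; a real pipeline may normalise scores quite differently.

```python
def score_to_reward(score, low=1, high=10):
    """Map a reviewer score onto [-1, 1]: below the midpoint is a penalty."""
    if not low <= score <= high:
        raise ValueError(f"score must be between {low} and {high}")
    midpoint = (low + high) / 2
    half_range = (high - low) / 2
    return (score - midpoint) / half_range


def mean_reward(scores):
    """Average the rewards from several reviewers for one chatbot response."""
    rewards = [score_to_reward(s) for s in scores]
    return sum(rewards) / len(rewards)


# Hypothetical scores from three reviewers for two chatbot responses.
friendly_reply = mean_reward([8, 9, 7])
stiff_reply = mean_reward([3, 2, 4])

print(friendly_reply, stiff_reply)
```

Averaging across reviewers is one simple way to soften individual bias, although it does not remove it.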

DPO example:

- Context: A health tech company is developing an AI application to provide personalised health and nutrition advice, which is becoming more and more common these days. The initial model, while knowledgeable, often provides overly technical or generic advice, neither of which is useful for the users.

- DPO Application: The company starts by fine-tuning the model on a dataset focused on personalised health advice. Then, they introduce preference data: examples of ideal personalised advice curated by health experts. The model learns to recognise patterns in these examples and adjust its outputs accordingly.

- Outcome: The AI application begins to provide more personalised and contextually relevant health advice. Through DPO, the model directly learns from preferred outcomes, bypassing the need for a separate reward model and accelerating the development process.
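To give a feel for what the preference data in this example might look like, here is a minimal sketch. DPO is usually trained on records holding a prompt, a preferred ("chosen") response and a less preferred ("rejected") one. Pairing an expert-curated answer against the model's own weaker drafts is an assumption made for this illustration, as are the class name, helper name and example text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PreferencePair:
    prompt: str
    chosen: str    # the response the experts prefer
    rejected: str  # a response the experts consider worse


def build_pairs(prompt, expert_answer, model_drafts):
    """Pair one expert-curated answer against each weaker model draft."""
    return [
        PreferencePair(prompt, expert_answer, draft)
        for draft in model_drafts
        if draft != expert_answer  # a response cannot be preferred over itself
    ]


# Hypothetical data for the health advice scenario.
dataset = build_pairs(
    prompt="I am vegetarian and often tired. What should I eat?",
    expert_answer="Try adding lentils and leafy greens for iron, and ask your GP about a blood test.",
    model_drafts=[
        "Eat a balanced diet.",
        "Consider haem versus non-haem iron bioavailability and ferritin thresholds.",
    ],
)

for pair in dataset:
    print(pair.rejected, "->", pair.chosen)
```

The generic and overly technical drafts end up on the rejected side, which mirrors the two problems described in the context above.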

Conclusion

With the ongoing race within Generative AI, anything that makes the training process more efficient and less capital-intensive upfront will let models evolve much faster and allow other players to come to the market. It also has the potential to ensure more ethically aligned and user-centric AI systems. I really look forward to seeing what comes next!